On-Device Pose Detection for Workout Tracking

1st place at the UChicago Class Hackathon, out of 60 participants.

Motivation

Traditional workout tracking relies on expensive wearables, external sensors, or manual counting, all of which are barriers to accessibility and user engagement. We wanted to track exercise performance accurately using only a smartphone camera, so that fitness tracking needs no additional hardware.

From a learning perspective, we wanted to explore the challenge of fitting machine learning models onto resource-constrained mobile devices and to understand the performance trade-offs of on-device inference. This project gave us hands-on experience optimizing ML models for real-time execution on consumer hardware.

Functionally, we aimed to go beyond simple rep counting. Our app, GymPT, tracks exercise form quality in real time: if your form is incorrect (e.g., insufficient squat depth, improper elbow position), the system won't count the repetition. Users therefore not only track their workouts but also maintain proper technique, which helps prevent injury and maximize effectiveness.

Technical Implementation

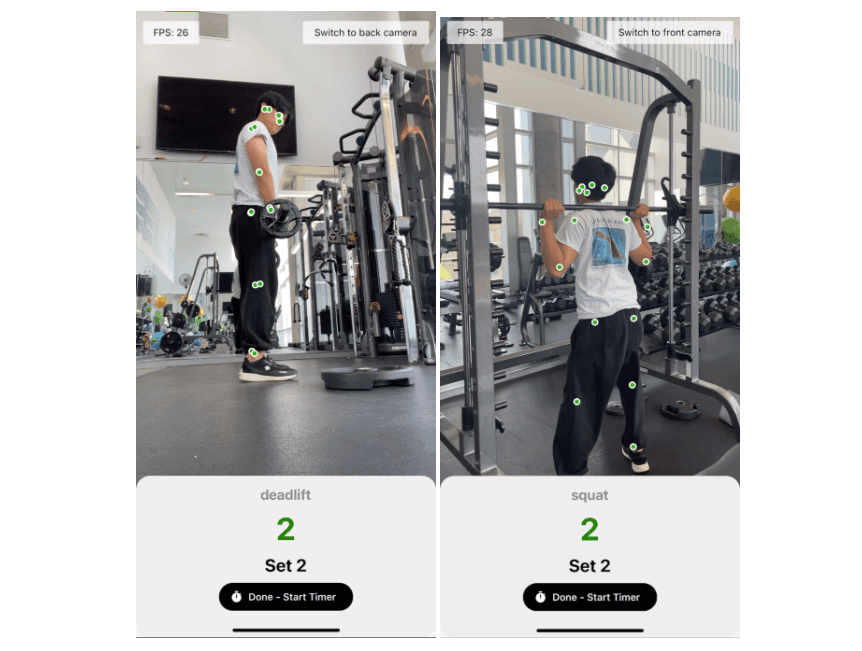

We built a full-stack mobile app using React Native and TensorFlow.js that performs real-time pose estimation entirely on-device. The system uses computer vision to detect and track key body joints (shoulders, elbows, hips, knees, etc.) through the phone's camera, eliminating the need for external sensors or wearables.

At the core of our solution is a joint-angle tracking system that monitors the angles between connected joints in real time. We designed a simple state machine over these joint-angle features to detect exercise phase changes (e.g., the "up" and "down" phases of a squat or bicep curl) and automatically count repetitions. Because a rep is counted only on a complete phase transition, frame-to-frame jitter in the detected angles does not produce miscounts, and the same structure works across different exercise types.
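The joint-angle and state-machine idea can be sketched roughly as follows. This is a minimal illustration, not the GymPT source: the `Point` type, the `jointAngle` and `RepCounter` names, and the 100°/160° knee-angle thresholds are all assumptions made for the example. It computes the angle at a joint from three 2D keypoints and counts a rep only when the angle first drops below a "down" threshold and then rises back above an "up" threshold.

```typescript
// Hypothetical types, names, and thresholds for illustration only.
interface Point { x: number; y: number; }

/** Angle at joint b, in degrees (0..180), between segments b->a and b->c. */
function jointAngle(a: Point, b: Point, c: Point): number {
  const v1 = { x: a.x - b.x, y: a.y - b.y };
  const v2 = { x: c.x - b.x, y: c.y - b.y };
  const dot = v1.x * v2.x + v1.y * v2.y;
  const cross = v1.x * v2.y - v1.y * v2.x;
  // atan2 of (cross, dot) gives the signed angle; we only need its magnitude.
  return Math.abs(Math.atan2(cross, dot)) * (180 / Math.PI);
}

type Phase = "up" | "down";

class RepCounter {
  private phase: Phase = "up";
  private count = 0;
  private readonly downBelow: number;
  private readonly upAbove: number;

  // Two separate thresholds give hysteresis: jitter around a single
  // threshold cannot flip the phase back and forth.
  constructor(downBelow: number, upAbove: number) {
    this.downBelow = downBelow;
    this.upAbove = upAbove;
  }

  /** Feed one frame's joint angle; returns the running rep count. */
  update(angleDeg: number): number {
    if (this.phase === "up" && angleDeg < this.downBelow) {
      this.phase = "down"; // required depth reached
    } else if (this.phase === "down" && angleDeg > this.upAbove) {
      this.phase = "up"; // back at the start position: one full rep
      this.count += 1;
    }
    return this.count;
  }

  get reps(): number {
    return this.count;
  }
}

// Usage: knee angle from hip, knee, and ankle keypoints (made-up coordinates).
const hip = { x: 0, y: 0 }, knee = { x: 0, y: 1 }, ankle = { x: 0, y: 2 };
console.log(jointAngle(hip, knee, ankle)); // standing straight: about 180

// One full squat followed by a shallow one that never reaches 100 degrees.
const squat = new RepCounter(100, 160);
const kneeAngles = [170, 150, 120, 95, 90, 110, 150, 165, 170, 130, 115, 140, 165];
for (const angle of kneeAngles) squat.update(angle);
console.log(squat.reps); // 1
```

In this sketch the shallow second squat is ignored because the counter never enters the "down" phase, which is one simple way to express the "insufficient depth means no rep" rule. Real thresholds would need tuning per exercise and camera angle.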

Performance & Results

After optimizing the pipeline, we measured the following on-device performance:

- ~24 FPS on-device inference (tested on iPhone 12)

- ~96% rep-count accuracy across common lifts including squats, bicep curls, and shoulder presses

- Real-time feedback with minimal latency, providing instant visual cues to users

Winning the Hackathon

GymPT won 1st place at the UChicago Class Hackathon for the Mobile Computing class, out of 60 participants. What set our project apart was the combination of:

- Practical utility: Addressing a real problem that anyone who works out can relate to

- Technical sophistication: Successfully implementing real-time computer vision and machine learning on mobile devices

- Polished execution: A fully functional live demo with smooth UI/UX that showcased the technology working reliably across multiple exercise types

- Accessibility focus: Making advanced fitness tracking available to anyone with a smartphone, without requiring expensive equipment