Post-training Video Models for Egocentric Video Generation

Post-training video generation models to generate egocentric video creation for robotics synthetic data generation.

Ilya Sutskever claimed, "Data is the fossil fuel of AI." Specifically, internet and web text have been the fuel powering the exponential growth of LLMs. While some argue we're running out of data to further train these models, the rapid advancement of LLMs has clearly demonstrated the capability of transformers and the empirical validity of scaling laws.

Robotics doesn't have that luxury. There is no web-scale corpus of "doing." The world isn't a dataset you can scrape at 2 a.m. Motion, contact, objects, people - each interaction is expensive to witness and even more expensive to record with fidelity. Not to mention that physical AI must run small unlike LLMs, which can burst from a data center, a robot's brain often has to live on-device.

So how do we create a fossil fuel for Robotics?

Currently, the field leans on teleoperation: a person puppeteering a robot while the robot watches and learns. Each robot gets, at best, 24 hours a day of potential operation, but people fatigue long before that and in practice, robots "fatigue" faster because batteries drain, joints heat up, sensors drift, maintenance intervenes.

Then we have simulation, also known as digital twins, that can run 10,000× faster than teleoperation with tens of thousands of rollouts in parallel on a GPU. We can mirror real robots in virtual space and rehearse them in virtual environments. Build the twin, then spawn variations. Systems like GR00T mimic help seed these worlds and behaviors.

But we have another problem: how do we generate this scene?

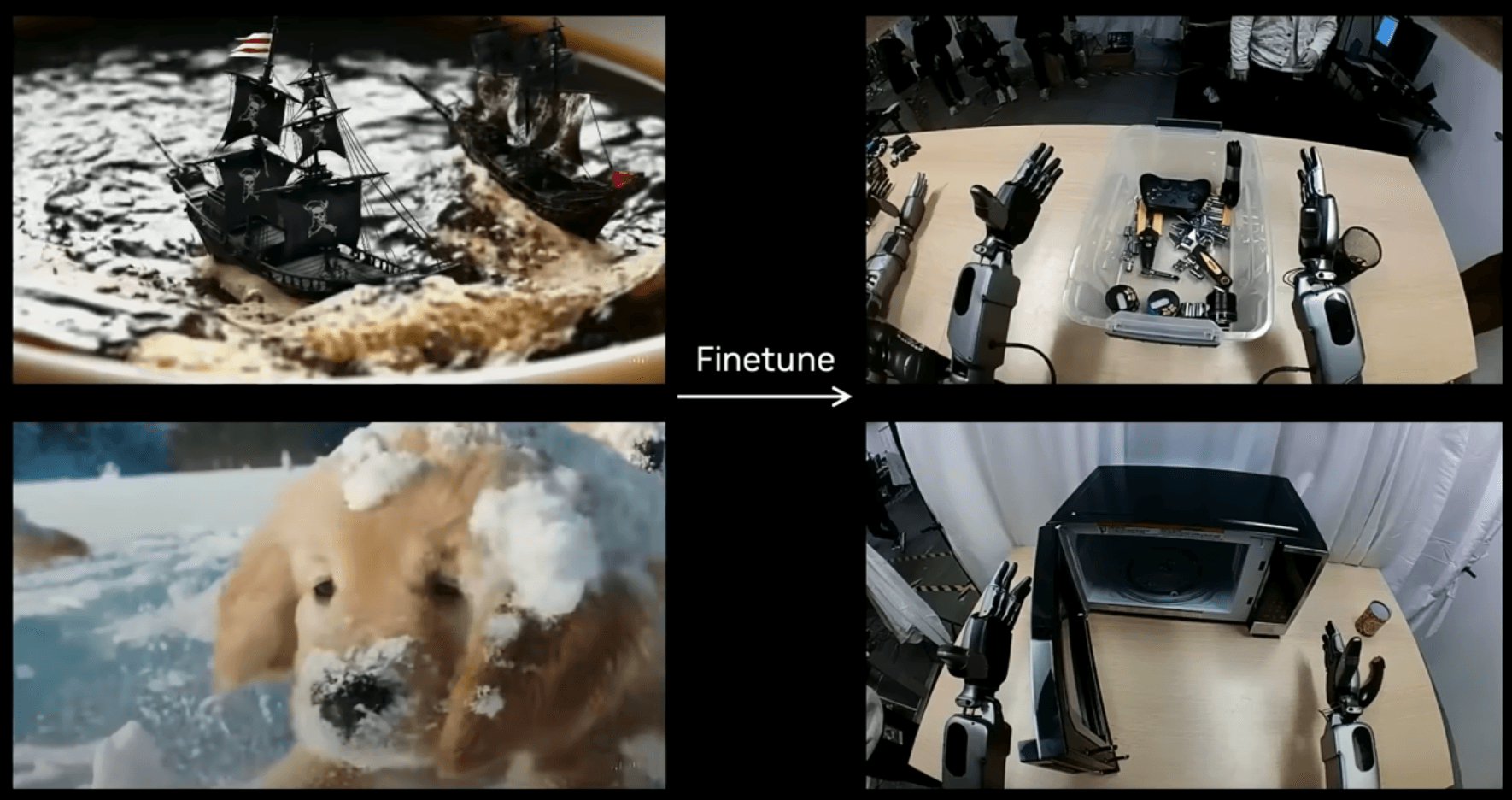

The world is closer to this image than clean simulation environemtns. There's food, fire, and breakable glass, forks, drinks, and plants, each with different textures. It's hard to build a simulation that matches this real-world complexity and this is where video-generation models can help. These models have been getting better at following the physical rules of the world (e.g., WorldSimBench: Towards Video Generation Models as World Simulators). If we can make a video-generation model perform tasks from an egocentric perspective, we can compensate for the lack of environmental diversity that current data-collection methods (teleoperation and simulation/digital twins) struggle to provide. To that end, I'm post-training state-of-the-art, open-source vision models to follow prompts in egocentric views as a way to address the diversity shortage in today's physical-AI data.